Diagnosis of Malaria Infection with or without Disease

Zeno Bisoffi, Federico Gobbi, Dora Buonfrate and Jef Van den Ende1

Centre for Tropical Diseases, Ospedale Sacro Cuore, Negrar (Verona), Italy

1 Department of Clinical Sciences, Institute of Tropical Medicine, Antwerp, Belgium


Correspondence to: Zeno Bisoffi. Centre for Tropical Diseases, Ospedale Sacro Cuore, Negrar (Verona), Italy.

E-mail: zeno.bisoffi@sacrocuore.it


Published: May 9, 2012

Received: March 1, 2012

Accepted: March 28, 2012

Mediterr J Hematol Infect Dis 2012, 4(1): e2012036, DOI 10.4084/MJHID.2012.036


This is an Open Access article distributed under the terms of the Creative Commons Attribution License (http://creativecommons.org/licenses/by/2.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Abstract

The revised W.H.O. guidelines for malaria management in endemic countries recommend that treatment be reserved for laboratory-confirmed cases, in both adults and children. The most widely used tools are currently rapid diagnostic tests (RDTs), which are accurate and reliable in diagnosing malaria infection. However, an infection is not necessarily clinical malaria, and RDTs may give positive results in febrile patients who have another cause of fever. In these cases, excessive reliance on RDTs may lead to potentially severe non-malarial febrile illnesses (NMFI) being overlooked. In countries or areas where transmission intensity remains very high, fever management in children (especially in the rainy season) should probably remain presumptive, as test-based management may be neither safe nor cost-effective. In contrast, in countries with low transmission, including those targeted for malaria elimination, RDTs are a key resource for limiting unnecessary antimalarial prescriptions and for identifying pockets of infected individuals. Research should focus on very sensitive tools for detecting infection on the one hand, and on improved tools for clinical management on the other, including biomarkers of clinical malaria and/or of alternative causes of fever.

Background

Malaria management policies in most sub-Saharan countries traditionally relied on presumptive diagnosis without laboratory confirmation. In practice, any fever was considered malaria, particularly in children, who are the most vulnerable to acute malaria.[1] This was mainly due to the lack of laboratory facilities at the peripheral level of the health system (Primary Health Care or P.H.C.). Diagnosis was therefore purely clinical. Overtreatment was an obvious consequence, but the rationale behind such empirical management was the need to avoid missing possible malaria cases, especially in children, with potentially fatal consequences. Moreover, until the beginning of the new millennium the treatment used in most countries was chloroquine, a cheap and affordable drug, albeit increasingly ineffective against Plasmodium falciparum because of the spread of resistant strains. During the last decade, the new artemisinin-based combination therapies (ACT) have become the first choice for P. falciparum malaria almost everywhere.[2] These are extraordinarily effective but also much more expensive. Presumptive treatment of all fevers with ACT therefore no longer seems a logical and affordable choice. This is not only for economic reasons: massive use of the new drugs is also feared to favour the selection and spread of artemisinin-resistant strains of P. falciparum. For this reason the WHO policy changed, recommending that antimalarial treatment be restricted to laboratory-confirmed cases.[2]

Unfortunately, the quality of malaria microscopy is far from satisfactory in most countries, especially in sub-Saharan Africa, where most of the malaria burden is concentrated. Moreover, microscopy is generally available only in hospitals and private laboratories, while health centres and dispensaries usually lack any laboratory facility.

Rapid Diagnostic Tests (RDTs) for malaria, based on antigen detection, offered a potential solution. RDTs can no longer be considered a new tool. The first tests detected the histidine-rich protein 2 (HRP-2) antigen of P. falciparum and were already commercially available at the beginning of the nineties.[3] However, they were much too expensive to be introduced in low- and middle-income countries (LMIC). They proved to be highly sensitive and specific, though with some false positives, especially in patients with a positive rheumatoid factor test.[4] In the following years, many new tests were developed, based on either HRP-2 or parasite lactate dehydrogenase (p-LDH). Some were restricted to P. falciparum, while others could also detect specific antigens of the other malaria species. The new tests were initially developed as a potential tool for travellers to malaria-endemic countries. They were then progressively introduced and evaluated in the field, and their cost fell as production and distribution increased. RDTs were evaluated in many malarious countries,[5-10] and were generally found to be accurate and cost-effective compared with the previous presumptive diagnosis. The W.H.O. then recommended that, where microscopy was not available, RDTs should be used systematically in suspected malaria, and treatment restricted to those testing positive.[2] Initially, the new policy applied to older children (> 5 years) and adults. Presumptive treatment remained the recommended approach for young children, on the grounds of their greater vulnerability to malaria. This empirical approach appeared reasonable: missing a malaria diagnosis in an infant might be fatal, whereas this is less likely, at least in high transmission settings, in older children and adults.
Moreover, as the ACT dose depends on the patient's weight, treating an adult is considerably more expensive than treating a child, and generally costs more than an RDT. This is not necessarily true for the paediatric dose.

Malaria infection versus malaria disease

Infection does not necessarily mean disease, and this is true for many different pathogens: viruses, bacteria, fungi, protozoa and helminths. In malaria, for example, infection may consist of the persistence of P. vivax or P. ovale “hypnozoites” in the liver. There they remain totally harmless for months or even years, until they eventually cause a relapse by re-invading the erythrocytes.[11] For P. falciparum the liver stage is limited to the initial invasion, and hypnozoites do not occur. P. falciparum infection means the presence of trophozoites and schizonts in the blood, where they undergo asexual replication in RBCs. The schizonts eventually rupture, destroying their host RBCs and releasing new merozoites that infect new RBCs. This rupture of the schizont (together with its host RBC) is the crucial event. It releases substances from both the host RBC and the parasite, which trigger cascades, largely mediated by several cytokines, that are responsible for the fever and the other main pathological features of malaria.[12] The intensity of the erythrocytic cycle depends on several factors. The crucial one is the level of immunity of the infected subject, which is related to previous exposure (the so-called “premunition”).[13] In areas with high transmission intensity, acute and potentially fatal attacks are typical of infants and young children, while older children and adults are at least partially protected by premunition. They are still exposed to malaria, but acute attacks are less frequent and less severe. Moreover, a high proportion of apparently healthy subjects carry malaria parasites in their blood.

What is clinical malaria? How to define malaria disease as opposed to simple malaria infection? The most honest answer to this simple question would be, surprisingly enough: we don’t know.

Malaria disease: fever plus plasmodia in blood?

For practical purposes, one could argue that clinical malaria is a fever (alone or with various combinations of other symptoms and signs) with presence of plasmodia in blood. Unfortunately, what is simple is not always true.

In a rural health centre in Banfora province, Burkina Faso, during the dry season, a 2-year-old boy is admitted with high fever, polypnoea and visible general prostration. The nurse in charge acts quickly: a malaria RDT is performed immediately and the result is positive. The child is immediately started on an intravenous quinine drip. Unfortunately, his condition worsens within a few hours and he dies the following morning.

Was there anything wrong in the case management? The nurse strictly followed the local guidelines, and i.v. artesunate was not yet available at the time. Clearly, a child may die of severe malaria, even if the management is appropriate, as in our case. But are we really sure that the child actually had malaria?

In endemic countries, the proportion of children who carry malaria parasites in their blood varies with transmission intensity and season, and may well exceed 50% in some situations.[14,15] This means that a child (or an adult) may have malaria infection without any obvious symptom of disease, including fever. Suppose now that this child develops pneumonia, typhoid fever or meningitis: what happens to the malaria parasites? Will they disappear from the blood? Certainly not, although their density may transiently decrease.[16] This child will have fever plus malaria parasites in the blood, and a malaria test will be positive (a true positive, for that matter). Yet this child does NOT have clinical malaria, and if I am guided by the test result alone I can make a fatal mistake.

Is malaria disease, then, a fever with malaria infection at high parasite density? The parasite density is the number of malaria parasites in the circulation, usually expressed per µL (or as the percentage of infected RBCs). It is usually estimated by microscopy. The thick film is examined, and all the parasites seen in the same microscopic fields are counted until 200 WBCs have been counted.[17] This is, of course, only a rough estimate. Moreover, in severe infection schizonts tend to be sequestered in the spleen, the liver, the brain and other internal organs, so a very high parasite burden may not be mirrored in the peripheral circulation.

It is certainly true that patients with clinical malaria tend to have a higher parasite density than subjects with simple malaria infection. There is no doubt that a patient with high fever and a 5% parasite density (or, say, >200,000 per µL) most probably HAS clinical malaria. But what about a patient with high fever and a lower parasite density? Can I exclude clinical malaria? Certainly not. This patient might also have clinical malaria, OR may simply carry malaria parasites while having another cause of fever. How can I tell?
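The thick-film estimate and the percentage-to-µL conversion above are simple proportions. The sketch below is illustrative only and the function names are hypothetical. It assumes the common convention of a standard leukocyte count of 8,000 WBC/µL when the patient's own count is unknown. It also assumes a red-cell count of about 5,000,000 RBC/µL for converting a percentage parasitaemia. Neither figure is given in this article.

```python
# Illustrative sketch: converting microscopy readings into parasite density.
# ASSUMPTIONS (not from the article): 8,000 WBC/µL when the patient's own
# leukocyte count is unknown, and ~5,000,000 RBC/µL for percent conversion.

ASSUMED_WBC_PER_UL = 8_000
ASSUMED_RBC_PER_UL = 5_000_000


def density_from_thick_film(parasites_counted: int,
                            wbc_counted: int = 200,
                            wbc_per_ul: float = ASSUMED_WBC_PER_UL) -> float:
    """Parasites per µL: the parasite/WBC ratio scaled by the WBC count per µL."""
    if wbc_counted <= 0:
        raise ValueError("wbc_counted must be positive")
    return parasites_counted * wbc_per_ul / wbc_counted


def percent_to_per_ul(percent_infected_rbc: float,
                      rbc_per_ul: float = ASSUMED_RBC_PER_UL) -> float:
    """Convert a parasitaemia given as % infected RBCs into parasites per µL."""
    return percent_infected_rbc * rbc_per_ul / 100


if __name__ == "__main__":
    # 50 parasites seen while counting 200 WBCs
    print(density_from_thick_film(50))   # 2000.0
    # The 5% parasitaemia mentioned in the text
    print(percent_to_per_ul(5))          # 250000.0
```

Under these assumptions, a 5% parasitaemia corresponds to roughly 250,000 parasites/µL, consistent with the ">200,000 per µL" figure quoted above. Using the patient's actual WBC or RBC count would change the estimate proportionally.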

Malaria disease: fever plus plasmodia in blood plus other typical symptoms?

Researchers have struggled to find clinical signs and symptoms that are good predictors or excluders of clinical malaria. Unfortunately, the results have been frustrating. Some symptoms such as vomiting may increase the probability of malaria in children, while other symptoms like cough may decrease that probability, but no symptom combination can actually confirm or rule out the suspicion of malaria in a febrile child.[18]

Malaria-attributable fever: an epidemiological concept.

Researchers assessing the efficacy of malaria vaccines were confronted with the lack of a case definition of clinical malaria. Simple malaria infection is not a good indicator of vaccine efficacy. Pragmatically, what matters is not how many infections a vaccine prevents, but how many disease episodes (and related deaths) vaccination can avoid. In search of a proxy for clinical malaria, the epidemiologists involved in vaccine trials therefore focused on fever. They attempted to estimate what proportion of fevers in a given population could be attributed to malaria.[16] The basic idea was the well-known epidemiological concept of attributable risk (AR), or attributable fraction (AF). In a longitudinal or case-control study, it estimates how many cases of a given disease are attributable to one or more risk factors. The formulas can be found in any epidemiology textbook.[19] We briefly summarize how this is done starting from longitudinal data. We take two populations of infected and uninfected children and assess the proportion of fevers occurring over a short time in the two groups.[20] Let fp be the proportion of febrile children among parasitaemic children, and fp0 the proportion of febrile children among aparasitaemic children. The AF (the proportion of fevers in parasitaemic children that is attributable to malaria) is then:

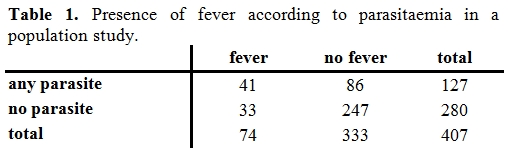

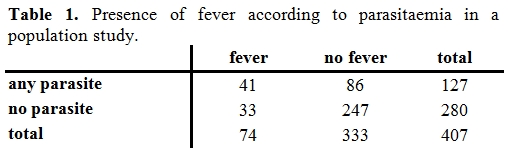

AF=(fp - fp0)/fp

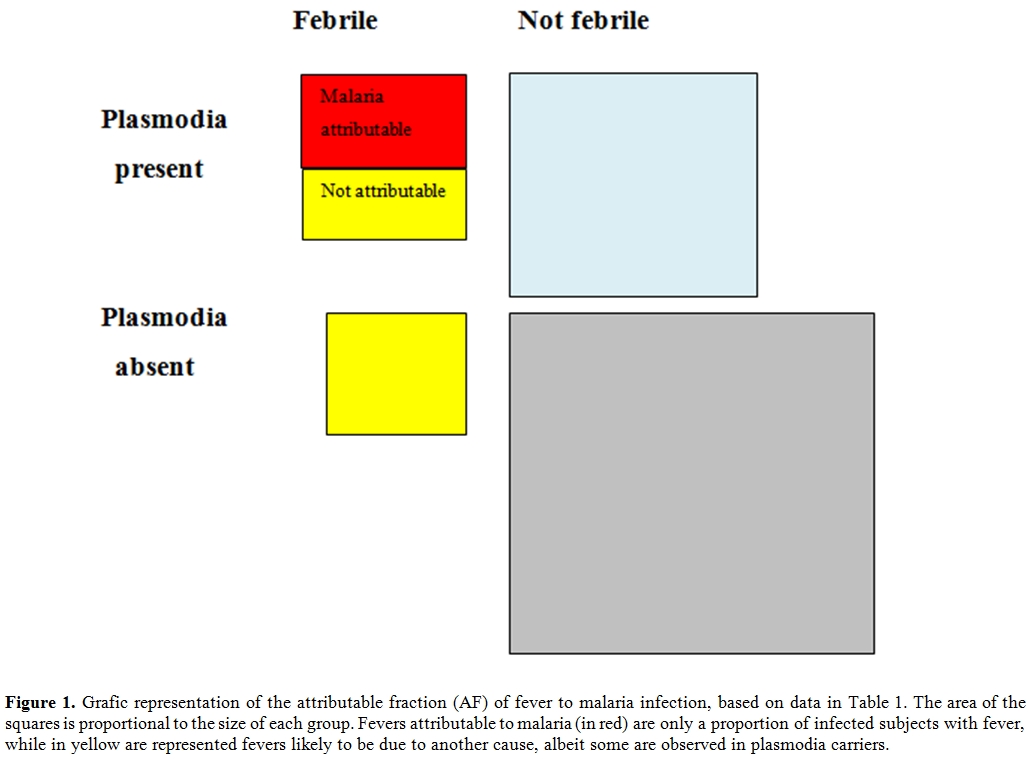

The following example (Table 1) is taken from a population study of children in the Gambia.[21] More parasitaemic than aparasitaemic children have fever (41/127, or 32%, versus 33/280, or 12%). However, malaria does not cause the fever in all 41 febrile children with parasites in their blood, because fever is also found in a (smaller) proportion of uninfected children. The proportion of fevers attributable to malaria is given by the AF:

fp = 41/127 ≈ 0.32 (32%)

fp0 = 33/280 ≈ 0.12 (12%)

AF = (0.32 - 0.12)/0.32 ≈ 0.63

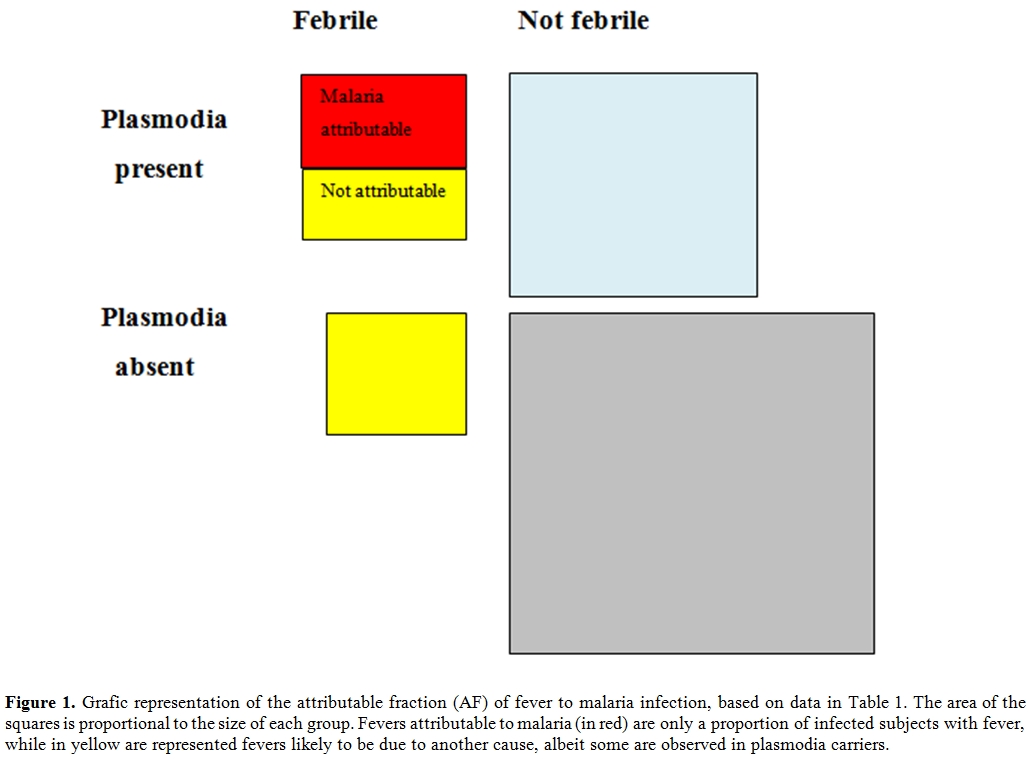

In plain language, 63% of children with fever and parasites are presumed to be ill because of malaria. In the other 37%, the fever is probably caused by another disease (see Figure 1 for a visual representation).

Table 1. Presence of fever according to parasitaemia in a population study.

Figure 1. Graphic representation of the attributable fraction (AF) of fever to malaria infection, based on the data in Table 1. The area of each square is proportional to the size of the group. Fevers attributable to malaria (in red) are only a proportion of the fevers in infected subjects. Fevers likely to be due to another cause are shown in yellow, some of them occurring in plasmodia carriers.
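The AF calculation for Table 1 can be reproduced in a few lines. This is a minimal sketch with a hypothetical function name. It works with exact fractions from the raw counts rather than the rounded percentages, so before rounding it gives about 0.635. Strictly speaking, this quantity is the attributable fraction among the exposed, i.e. among parasitaemic children.

```python
from fractions import Fraction


def attributable_fraction(febrile_infected: int, total_infected: int,
                          febrile_uninfected: int, total_uninfected: int) -> Fraction:
    """AF = (fp - fp0) / fp: share of fevers in parasitaemic children due to malaria."""
    fp = Fraction(febrile_infected, total_infected)        # fever rate, parasitaemic
    fp0 = Fraction(febrile_uninfected, total_uninfected)   # fever rate, aparasitaemic
    if fp == 0:
        raise ValueError("no febrile parasitaemic children: AF undefined")
    return (fp - fp0) / fp


# Gambian data from Table 1: 41/127 parasitaemic vs 33/280 aparasitaemic children febrile
af = attributable_fraction(41, 127, 33, 280)
print(round(float(af), 2))     # 0.63
# Expected number of the 41 febrile parasitaemic children whose fever is due to malaria
print(round(float(af * 41)))   # 26
```

The second line shows how the population-level AF translates into counts: about 26 of the 41 febrile parasitaemic children are expected to have malaria-attributable fever. The AF cannot say which 26.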

In a given endemic area, only a proportion of fevers among infected subjects is attributable to malaria. This proportion increases with parasite density, and above a given cut-off virtually all fevers are attributable to malaria. The trouble is that this proportion varies with several factors. These include the intensity of transmission, which is usually influenced by season and often varies from year to year, and the age of the subjects. Moreover, the AF is an epidemiological concept that cannot be automatically translated into an individual diagnosis. In a rural area of Burkina Faso, we estimated the AF among children > 5 years with fever in the rainy season. For those with a P. falciparum parasite density between 400 and 4000/µL, about half of the cases were attributable to malaria.[22] Suppose I see an individual patient with fever and a positive malaria film for P. falciparum, with a parasite density of, say, 2000/µL. All I can say is that my patient is about 50% likely to have an acute malaria attack. The patient is equally likely to be a carrier of malaria parasites with another cause of the current fever.

Malaria infection without disease: plasmodia in blood without fever?

Is asymptomatic malaria infection a harmless condition? Can infected subjects without fever be defined as healthy carriers? The answer is no. Unfortunately, little research has been done on the pathological effects of long-term malaria infection. However, strong epidemiological evidence shows that malaria infection produces pathological effects even in the absence of fever. Anemia is certainly more frequent and more severe in children with malaria parasites in their blood, and so is splenomegaly.[23] Indeed, the so-called “spleen rate” is a marker of malaria prevalence almost as accurate as a laboratory-based survey.[24] Anemia and an enlarged spleen are the most common markers of “chronic malaria”, and these subjects cannot be defined as “healthy”. The most severe form of chronic malaria is hyper-reactive malarial splenomegaly (HMS). This neglected condition is characterized by gross splenomegaly, pancytopenia and severely impaired cellular immunity.[25] Plasmodia are present in the blood, but at such low density that both microscopy and RDTs may miss them. This condition is invariably fatal if not adequately treated, and is probably the tip of the iceberg of the pathological manifestations of chronic malaria infection. The first trials of insecticide-impregnated bed nets gave a striking, indirect epidemiological indication of the harmful effects of malaria infection, with a spectacular reduction in child mortality.[26] The prevention of malaria-specific deaths explained only a fraction of this reduction, indicating that malaria infection predisposes to death from other causes. Therefore, malaria infection is likely to produce pathological effects even in the absence of fever, and when it is detected it should probably be treated.

Diagnosis of malaria infection and disease.

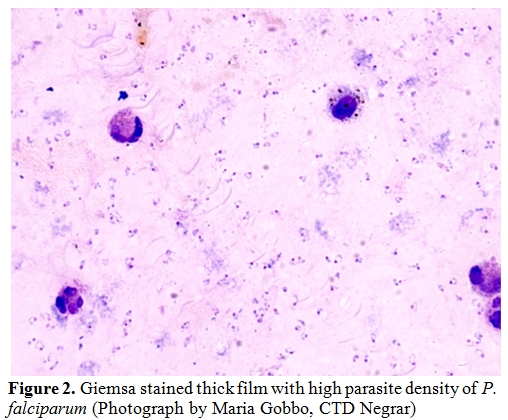

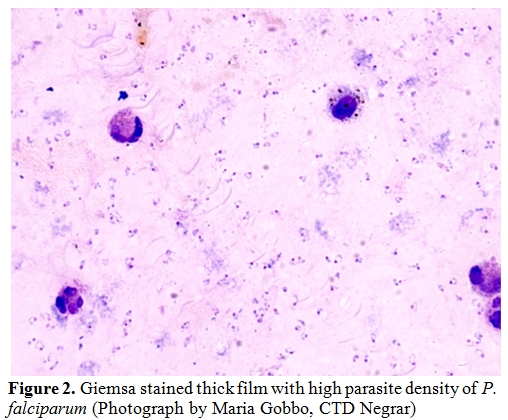

Microscopy is still the gold standard for the diagnosis of malaria infection. The techniques of slide preparation, staining and reading are well known and standardized. So is the estimation of parasite density, an added value of microscopy that can easily be obtained from a thick film (Figure 2).[17] RDTs are now replacing microscopy almost everywhere in endemic countries at P.H.C. level. Many different tests are available on the market, and the W.H.O. is systematically evaluating their accuracy in partnership with other organizations.[27,28] Several RDTs, based on either HRP-2 or p-LDH, are virtually 100% sensitive at a comparatively low parasite density (200 parasites/µL). They are also highly specific for P. falciparum infection, and some are specific for other plasmodia as well.

Other diagnostic methods do exist, such as the Quantitative Buffy Coat (QBC) and polymerase chain reaction (PCR), but they require adequate laboratory facilities and are not an option for routine use in endemic areas.

For practical purposes, RDTs are presently the main tool for the diagnosis of malaria in the field.

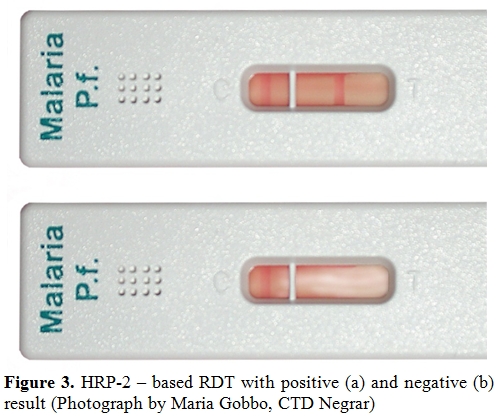

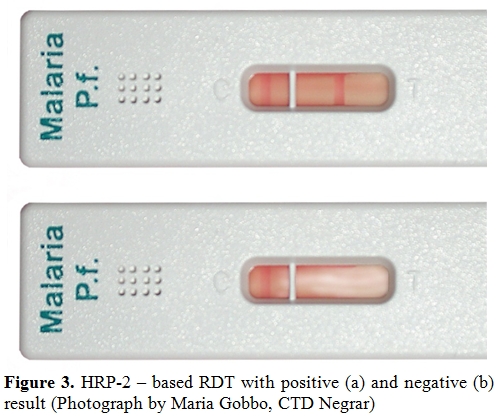

In immunochromatographic RDTs, monoclonal antibodies conjugated to a dye capture malaria antigen on a nitrocellulose strip, causing a clearly visible line to appear. Most tests have a control line, which is the only line that appears in a negative test (Figure 3, a). In a positive test a second line appears (Figure 3, b), usually within 15 minutes or less. Reading is therefore straightforward and reproducible, unlike microscopy, which makes RDTs an ideal tool in the field.
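The reading rule can be written as a small decision function. This is a hypothetical sketch for a single-test-line device. The "invalid" outcome when the control line is absent is a standard manufacturer convention, not something stated in this article. Real multi-band devices (e.g. with separate P. falciparum and pan-species lines) would need additional branches.

```python
from enum import Enum


class RdtResult(Enum):
    POSITIVE = "positive"
    NEGATIVE = "negative"
    INVALID = "invalid"


def read_rdt(control_line_visible: bool, test_line_visible: bool) -> RdtResult:
    """Interpret a single-test-line RDT read within the manufacturer's time window."""
    # No control line: the strip did not run correctly, so any test line is uninterpretable.
    if not control_line_visible:
        return RdtResult.INVALID
    # Control line present: a second (test) line means antigen was captured.
    return RdtResult.POSITIVE if test_line_visible else RdtResult.NEGATIVE


print(read_rdt(True, False).value)   # negative
print(read_rdt(True, True).value)    # positive
```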

The systematic use of RDTs, now recommended by the WHO for both children and adults,[29] should restrict antimalarials to those who really need them. This reduces the costs of malaria control programmes and preserves the efficacy of artemisinin-based combinations. Moreover, once malaria has been excluded as the cause of fever in RDT-negative subjects, health workers should consider alternative causes of fever. In many countries malaria incidence has sharply declined, and only a small proportion of all fevers are now due to malaria.[30-33]

The new WHO policy was preceded by a heated debate in the malaria community.[34,35] It was largely based on convincing results from countries where malaria incidence had decreased dramatically, such as Tanzania.[36,37]

The extension of this policy to countries where this decline has not yet occurred is questionable, though.

Figure 2. Giemsa stained thick film with high parasite density of P. falciparum (Photograph by Maria Gobbo, CTD Negrar)

Figure 3. HRP-2 – based RDT with positive (a) and negative (b) result (Photograph by Maria Gobbo, CTD Negrar)

Accuracy and cost effectiveness of RDTs for the diagnosis of malaria infection and of malaria-attributable fever.

RDTs are an accurate tool for the diagnosis of malaria infection.[5-10,20,25,26] Their sensitivity is high enough to rule out malaria as the cause of fever in most instances. They miss only very low parasite densities, which are generally of no clinical significance. Nevertheless, in infants and young children the proportion of fevers attributable to malaria is very high even at the lowest parasite densities, which RDTs may miss.[20,38] Moreover, on rare occasions an RDT may miss even a high or very high parasite density, because of the so-called prozone effect[39] or for other reasons. In general, however, withholding malaria treatment after a negative RDT appears to be reasonably safe.[35] Specificity is a more complex matter. Many studies have assessed specificity for malaria infection and generally found it to be very high: when an RDT is positive, plasmodia are indeed present in the blood. However, unlike traditional microscopy, RDTs provide no estimate of parasite density. Their specificity for malaria-attributable fever is highly variable, and very low in some epidemiological contexts.[20] In practical terms, a patient with fever AND a positive RDT may have malaria, OR an alternative cause of fever. At the peripheral level of endemic countries, clinical officers are most often nurses. Before RDTs were introduced, they used diagnostic algorithms based on clinical symptoms and signs only. When, in their judgement, both malaria and a bacterial fever were possible, they often treated a febrile patient with both an antimalarial and an antibiotic. RDTs should limit this “double prescription” by restricting antimalarials to those with a positive test. Antibiotics alone would then be given to those who test negative for malaria and have symptoms and signs suggesting a possible bacterial cause.

While the latter option is probably reasonably safe, the former is not. Suppose a child with a fever due, say, to pneumonia is tested and the RDT is positive. The nurse may then be led to treat for malaria only, whereas on clinical grounds he or she would probably have given both treatments.
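A small calculation makes the difference between specificity for infection and specificity for malaria-attributable fever concrete. The sketch below is illustrative and rests on explicit assumptions. The RDT is treated as perfect for infection: every infected patient tests positive and no uninfected patient does. The AF among RDT-positive febrile patients is taken as known. The cohort figures are hypothetical, apart from echoing the ~90% positivity reported in Burkina Faso during the rainy season.[20]

```python
from dataclasses import dataclass


@dataclass
class FebrileCohort:
    n_febrile: int             # febrile patients tested
    rdt_positive: int          # RDT-positive, ASSUMED equal to truly infected
    af_among_positive: float   # share of positives whose fever is malaria-attributable


def clinical_accuracy(c: FebrileCohort) -> tuple[float, float]:
    """(PPV, specificity) of a positive RDT for malaria-attributable fever."""
    if c.rdt_positive == 0:
        raise ValueError("PPV undefined without positive tests")
    malaria_fevers = c.rdt_positive * c.af_among_positive
    # Infected carriers whose fever has another cause: "false positives" in clinical terms.
    other_cause_positive = c.rdt_positive - malaria_fevers
    # Under the perfect-infection-test assumption, every negative is a non-malarial fever.
    rdt_negative = c.n_febrile - c.rdt_positive
    ppv = malaria_fevers / c.rdt_positive
    specificity = rdt_negative / (rdt_negative + other_cause_positive)
    return ppv, specificity


# Hypothetical high-transmission setting: 90% positive, half of positive fevers due to malaria.
high = clinical_accuracy(FebrileCohort(100, 90, 0.5))
# Hypothetical low-transmission setting: 10% positive, 90% of positive fevers due to malaria.
low = clinical_accuracy(FebrileCohort(100, 10, 0.9))
print([round(x, 2) for x in high])   # [0.5, 0.18]
print([round(x, 2) for x in low])    # [0.9, 0.99]
```

Under these assumptions the PPV of a positive RDT simply equals the AF. Specificity for malaria-attributable fever collapses when most febrile children carry parasites, even though specificity for infection is perfect by construction.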

As far as cost-effectiveness is concerned, most studies have concluded that an RDT-based malaria management policy is cost-effective compared with presumptive management.[40-47] However, a major flaw of most of these studies was the implicit assumption that health workers would fully adhere to a negative test result. In at least some countries, a high proportion of febrile patients who tested negative were shown to be treated for malaria nevertheless.[48,49] Other researchers found more encouraging results, but in contexts where malaria incidence had dropped.[34,50] Where and when malaria incidence is still high, as in most of West Africa during the rainy season, an RDT-based policy was shown to be less cost-effective than the presumptive approach. It was also potentially harmful, especially because a clinically false positive result may affect antibiotic prescription for non-malarial febrile illnesses.[51]
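A simple drug-plus-test cost comparison illustrates the adherence issue. This is a hypothetical sketch, not a cost-effectiveness model. It counts only RDT and ACT costs per 100 febrile patients and ignores health outcomes and antibiotic costs. The unit prices are invented (one ACT course = 1.0, one RDT = 0.6, in an arbitrary currency), and the function name is illustrative.

```python
def costs_per_100_febrile(act_cost: float, rdt_cost: float,
                          positivity: float, adherence: float) -> tuple[float, float]:
    """Return (presumptive, test_based) drug-plus-test costs for 100 febrile patients.

    positivity: share of febrile patients testing RDT-positive.
    adherence:  share of RDT-negative patients who are NOT given an antimalarial.
    """
    n = 100
    presumptive = n * act_cost                       # everyone gets an ACT, no test
    treated = n * positivity + n * (1 - positivity) * (1 - adherence)
    test_based = n * rdt_cost + treated * act_cost   # everyone tested, some treated
    return presumptive, test_based


# Hypothetical unit prices: ACT course 1.0, RDT 0.6
for positivity, adherence in [(0.2, 1.0), (0.2, 0.3), (0.9, 1.0)]:
    p, t = costs_per_100_febrile(1.0, 0.6, positivity, adherence)
    print(positivity, adherence, round(p, 1), round(t, 1))
# 0.2 1.0 100.0 80.0
# 0.2 0.3 100.0 136.0
# 0.9 1.0 100.0 150.0
```

With these invented prices, the test-based strategy saves money only when positivity is low and negative results are respected. Poor adherence or very high positivity reverses the comparison.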

A further limitation to the cost-effectiveness of RDTs is the general tendency to use them on patients without fever as well,[48,52] which wastes resources. We have seen that malaria infection without fever is not necessarily harmless. Even so, extending antimalarial treatment to afebrile patients would increase the risk of selecting drug-resistant strains, which is precisely what the test-based policy is meant to limit.

An evidence-based approach to malaria diagnosis and management.

Malaria management policies cannot be the same everywhere, regardless of the epidemiological context. Where malaria incidence is (or has become) low or very low, RDTs clearly have a key role in limiting unnecessary antimalarial prescriptions. In such contexts, withholding malaria treatment after a negative test is most probably safe.[34,35] Health workers should, however, be properly trained to deal with a positive result. If the clinical picture suggests a possible bacterial cause of fever, a double treatment (antimalarial plus antibiotic) is justified. A positive RDT might be a false positive in clinical terms, detecting malaria infection in a patient who actually has another acute disease. Clinical guidelines, including the Integrated Management of Childhood Illness (IMCI), should introduce RDTs at the right step. In clinical algorithms, the node on treatment decisions for NMFI should come before the RDT node. The RDT result should guide malaria treatment, but should not be used to exclude other potential causes of fever.[49]
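The recommended ordering of nodes can be sketched as a decision function. This is a hypothetical illustration of the principle for a low-transmission setting, not the IMCI algorithm itself. The clinical assessment of a possible bacterial cause is reduced to a single boolean, and the treatment labels are placeholders.

```python
from dataclasses import dataclass


@dataclass
class FebrilePatient:
    signs_of_bacterial_cause: bool   # hypothetical summary of the clinical (NMFI) assessment
    rdt_positive: bool


def treatment_plan(p: FebrilePatient) -> set[str]:
    plan: set[str] = set()
    # Node 1 (NMFI) comes first and is not overridden by the RDT result.
    if p.signs_of_bacterial_cause:
        plan.add("antibiotic")
    # Node 2: the RDT only decides whether an antimalarial is added.
    if p.rdt_positive:
        plan.add("antimalarial")
    # Neither indicated: malaria excluded, so look for other causes of fever.
    if not plan:
        plan.add("reassess for other causes of fever")
    return plan


print(sorted(treatment_plan(FebrilePatient(True, True))))   # ['antibiotic', 'antimalarial']
```

Because the NMFI node is evaluated independently, a positive RDT can only add an antimalarial. It can never remove an antibiotic indicated on clinical grounds, which is the point of placing that node first.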

In countries targeted for malaria elimination,[53] RDTs, and potentially new and even more sensitive diagnostic tools, may also help to identify pockets of infected people, regardless of the presence of fever. These people can then be treated, for their own benefit and that of the community.[54]

In contrast, in countries, or areas within countries, where malaria is still hyperendemic or holoendemic, the presumptive, clinical approach should be maintained, at least for children, and RDTs should not be used. For example, a recent study in Burkina Faso during the rainy season found that almost 90% of febrile children had a positive RDT.[20] The device tested was Paracheck® Device, which is not one of the most sensitive RDTs according to the W.H.O. assessment.[25,26] With a more sensitive test the percentage of positive results would probably have approached 100%, making the test useless as a decision tool. Clearly, in such a situation the test would only add its own cost to that of the treatment, which febrile children would receive anyway.

Diagnosing imported malaria in non endemic countries.

What might be a correct approach to suspected imported malaria in a non-endemic country? In this setting, malaria infection equals malaria disease, except for patients who have recently immigrated from holoendemic countries. Here, diagnostic and therapeutic means are practically unlimited. Comparing different diagnostic tools is difficult. If microscopy remains the gold standard (reference test), the judgement will depend on how many fields have been examined. About 100 high-magnification fields is currently standard. In some cases, however, a diagnosis is made only after carefully scrutinizing a thick film for one or two hours, finally finding a single parasite! This happens especially when P. ovale is involved, or when a treatment has already been started, even a non-specific one (e.g. an antibiotic with antimalarial activity such as cotrimoxazole). PCR is highly sensitive, but will probably not outperform such a lengthy microscopic search. A multicentre diagnostic study by the GISPI network in Italy confirmed that expert microscopy remains the mainstay of the diagnosis of imported malaria. This is particularly true in mixed or non-falciparum infections, where it still outperforms alternative methods, including PCR.[55]

However, advanced diagnostic tools and expertise are not available in every care centre. In peripheral centres PCR is not available, and laboratory technicians lack expertise in microscopy. Moreover, malaria treatment should be started as soon as possible; diagnosis cannot wait until the next morning. As a consequence, RDTs have a substantial role in imported malaria. They are easy to perform, and recent brands distinguish between falciparum and non-falciparum species. Their sensitivity is high enough to exclude a clinically important, imminently life-threatening falciparum infection. A further advantage is that recent treatment does not necessarily reduce RDT sensitivity. National quality control of RDT accuracy, by comparison with a simultaneously taken thick film, is imperative, since there are many caveats to their correct use and interpretation.
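Quality control against a simultaneously taken thick film amounts to a 2×2 comparison. The sketch below is minimal: the thick film is treated as the reference standard, and the function name and audit counts are hypothetical.

```python
def accuracy_vs_thick_film(true_pos: int, false_pos: int,
                           false_neg: int, true_neg: int) -> dict[str, float]:
    """Sensitivity and specificity of RDT results against paired thick films.

    true_pos: RDT+/film+   false_pos: RDT+/film-
    false_neg: RDT-/film+  true_neg: RDT-/film-
    """
    if true_pos + false_neg == 0 or true_neg + false_pos == 0:
        raise ValueError("need both film-positive and film-negative samples")
    return {
        "sensitivity": true_pos / (true_pos + false_neg),
        "specificity": true_neg / (true_neg + false_pos),
    }


# Hypothetical audit of 200 paired samples
print(accuracy_vs_thick_film(true_pos=48, false_pos=3, false_neg=2, true_neg=147))
# {'sensitivity': 0.96, 'specificity': 0.98}
```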

Conclusions and research needs.

At an expert meeting held at the European Commission DG Research in 2010,[56] experts were asked what the most relevant research needs in malaria diagnostics were. The basic conclusion was that we already have good tools for diagnosing malaria infection. However, in the context of malaria elimination programmes, research should focus on developing even more sensitive tools. These should also be able to detect asymptomatic carriers (of gametocytes, not only of asexual forms), helping to identify pockets of infected individuals who can then be successfully treated.[57] For clinical management, however, existing tools are already sensitive enough, as they detect the vast majority of malaria-attributable fevers. The problem is their lack of specificity for clinical malaria, especially in high transmission settings. An ideal diagnostic tool would detect malaria infection and also identify biomarkers of clinically significant malaria, capable of distinguishing disease from simple infection. Potential biomarkers have already been identified. In addition, an improved malaria RDT would give a semi-quantitative assessment of parasite density. Alternatively, research should focus on incorporating markers of more than one disease in the same device, for example by adding a biomarker of bacterial disease to a malaria RDT.

One may wonder, though, whether excessive reliance on new technology will lead to the definitive abandonment of basic, microscopy-based laboratories in the field. Such laboratories could still be invaluable for diagnosing not only malaria but also TB, meningitis and other diseases, provided that adequate training, supervision and quality control are ensured.

Malaria management policies in most sub-Saharan countries traditionally relied on presumptive diagnosis without laboratory confirmation, basically considering any fever as malaria, particularly in children, being the most vulnerable to acute malaria.[1] This was basically due to the lack of laboratory facilities at the peripheral level of the health system (Primary Health Care or P.H.C.). The diagnosis was then barely clinical, and while over treatment was an obvious consequence, the rationale behind such empirical management was the need to avoid missing possible malaria cases, especially in children, with potentially fatal consequences. Moreover, the treatment used in most countries until the beginning of the new millennium was chloroquine, a cheap and affordable drug, albeit increasingly ineffective against Plasmodium falciparum due to the spread of selected resistant strains. During the last decade, the new antimalarial Artemisinin Combined Treatments (ACT), that are extraordinarily effective but also orders of magnitude more expensive, have become the first choice for P. falciparum malaria almost everywhere.[2] Presumptive treatment of all fevers with ACT would no more seem a logical and affordable choice, not only for economic reasons, but also because it is feared that a massive use of the new drugs might induce selection and diffusion of artemisinin-resistant strains of P. falciparum. For this reason the WHO policy changed, recommending restricting antimalarial treatment to laboratory confirmed cases.[2]

Unfortunately, the quality of malaria microscopy is far from satisfactory in most countries, especially in sub-Saharan Africa, where most of the malaria burden is concentrated. Moreover, microscopy is generally available only in hospitals and private laboratories, while health centres and dispensaries usually lack any laboratory facility.

Rapid Diagnostic Tests (RDTs) for malaria, based on antigen detection, were the potential solution. RDTs can no longer be considered a new tool. The first tests, based on the detection of the histidine-rich protein 2 (HRP-2) antigen of P. falciparum, were already commercially available at the beginning of the 1990s,[3] though far too expensive to be introduced in low- and middle-income countries (LMIC). They proved highly sensitive and specific, though with some false positives, especially in patients with a positive rheumatoid factor test.[4] In the following years many new tests were developed, based either on HRP-2 or on parasite lactate dehydrogenase (p-LDH); some were restricted to P. falciparum, while others could also detect specific antigens of the other malaria species. Initially developed as a potential tool for travellers to malaria-endemic countries, the new tests were then progressively introduced and evaluated in the field, while their cost fell as production and distribution increased. RDTs were evaluated in many malarious countries[5-10] and were generally found to be accurate and cost-effective compared with the previous presumptive diagnosis. The W.H.O. then recommended that, if and where microscopy was not available, RDTs should always be used in case of suspected malaria, and treatment should be restricted to those testing positive.[2] Initially, the new policy applied to older children (> 5 years) and adults, while the presumptive policy remained the recommended one for small children, on the grounds of their higher vulnerability to malaria. This empirical approach appeared reasonable: missing a malaria diagnosis in an infant may be fatal, while this is less so, at least in high transmission settings, for older children and adults.
Moreover, as the ACT dosage depends on the patient's weight, treating an adult is considerably more expensive than treating a child, and generally costs more than an RDT, whereas this is not necessarily true for the paediatric dosage.

Malaria infection versus malaria disease

Infection does not necessarily mean disease, and this is true for many different potential pathogens: viruses, bacteria, fungi, protozoa and helminths. In malaria, the infection may be characterised, for example, by the persistence of P. vivax or P. ovale “hypnozoites” in the liver, where they remain entirely harmless for months or even years, until they eventually reactivate and cause a relapse, with parasites again reaching the erythrocytes.[11] For P. falciparum the liver stage is limited to the initial invasion, and the hypnozoite phenomenon does not occur. P. falciparum infection means the presence of trophozoites and schizonts in the blood, where they undergo asexual replication in RBCs, eventually destroying them when the schizonts rupture and release new merozoites that infect new RBCs. The crucial event is the rupture of the schizont (together with its host RBC), which releases substances from both the host RBC and the parasite; these trigger cascades, largely mediated by cytokines, that are responsible for the fever and the other main pathological features of malaria.[12] The intensity of the erythrocytic cycle depends on several factors, the crucial one being the level of immunity of the infected subject, which is related to previous exposure (the so-called “premunition”).[13] In areas with high transmission intensity, acute and potentially fatal attacks are typical of infants and young children, while older children and adults are at least partially protected by premunition. They are still exposed to malaria, but acute attacks are less frequent and less severe. Moreover, a high proportion of apparently healthy subjects carry malaria parasites in their blood.

What is clinical malaria? How to define malaria disease as opposed to simple malaria infection? The most honest answer to this simple question would be, surprisingly enough: we don’t know.

Malaria disease: fever plus plasmodia in blood?

For practical purposes, one could argue that clinical malaria is a fever (alone or with various combinations of other symptoms and signs) with presence of plasmodia in blood. Unfortunately, what is simple is not always true.

In a rural health centre in Banfora province, Burkina Faso, during the dry season, a 2-year-old boy is admitted because of high fever, polypnoea and visible general prostration. The nurse in charge acts quickly: a malaria RDT is performed at once and the result is positive. The child is immediately started on an intravenous quinine drip. Unfortunately, his condition worsens within a few hours and he dies the following morning.

Was there anything wrong in the case management? The nurse strictly followed the local guidelines, and i.v. artesunate was not yet available at the time. Clearly, a child may die of severe malaria, even if the management is appropriate, as in our case. But are we really sure that the child actually had malaria?

In endemic countries, the proportion of children carrying malaria parasites in the blood varies with transmission intensity and season, and may well exceed 50% in some situations.[14,15] This means that a child (or an adult) may have malaria infection without any obvious symptom of disease, including fever. Suppose now that this child develops pneumonia, typhoid fever or meningitis: what happens to the malaria parasites? Will they disappear from the blood? Certainly not, although a transient decrease in parasite density may occur.[16] This child will have fever plus malaria parasites in the blood, and a malaria test will be positive (a true positive, for that matter); yet this child will NOT have clinical malaria, and if I am guided by the test result I may make a fatal mistake.

Is malaria disease, then, a fever with malaria infection at high parasite density? The parasite density is the number of malaria parasites in the circulation, usually expressed per µL (or as the percentage of infected RBCs). By microscopy it is estimated by examining the thick film until 200 WBC have been counted, together with all the parasites detected in the same microscopic fields.[17] This is, of course, a rough estimate. Moreover, in severe infection schizonts tend to be sequestered in the spleen, liver, brain and other internal organs, so a very high parasite burden may not be mirrored in the peripheral circulation.
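The thick-film count described above converts to a density by simple proportion. The sketch below is illustrative only; it assumes a standard white-cell count of 8000 WBC/µL, a conventional value that is not stated in the text and is used only when the patient's own WBC count is unknown.

```python
# Assumption (not from the text): 8000 WBC per µL, the conventional
# value used when the patient's own white-cell count is not available.
ASSUMED_WBC_PER_UL = 8000


def parasite_density_per_ul(parasites_counted: int,
                            wbc_counted: int = 200,
                            wbc_per_ul: float = ASSUMED_WBC_PER_UL) -> float:
    """Estimate asexual parasites per µL from a thick-film count.

    Parasites are counted in the same fields in which `wbc_counted`
    white cells were counted; the parasite/WBC ratio is then scaled
    to the (assumed) WBC concentration.
    """
    if wbc_counted <= 0:
        raise ValueError("wbc_counted must be positive")
    return parasites_counted * wbc_per_ul / wbc_counted


# Example: 50 parasites seen while counting 200 WBC.
print(parasite_density_per_ul(50))  # 2000.0
```

If the patient's actual WBC count is known, passing it as `wbc_per_ul` replaces the conventional assumption; the result remains the rough estimate the text describes, since sequestered parasites are not counted.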

It is certainly true that patients with clinical malaria tend to have a higher parasite density than subjects with a simple malaria infection, and there is no doubt that a patient with high fever and a 5% parasitaemia (or, say, >200,000 per µL) most probably HAS clinical malaria. But what about a patient with high fever and a lower parasite density? Can I rule out clinical malaria? Certainly not. This patient might also have clinical malaria, OR he may be a simple carrier of malaria parasites with another actual cause of fever. How do I know?
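The two ways of expressing parasite density mentioned above are linked through the red-cell count. A minimal sketch, assuming a red-cell count of 4 million RBC/µL (an illustrative value not given in the text; real counts vary, and are lower in anaemic patients), shows why a 5% parasitaemia corresponds to roughly 200,000 parasitised RBC/µL:

```python
# Assumption (not from the text): 4,000,000 RBC per µL. Actual counts
# vary with age and sex and are lower in anaemia.
ASSUMED_RBC_PER_UL = 4_000_000


def percent_to_per_ul(percent_infected: float,
                      rbc_per_ul: float = ASSUMED_RBC_PER_UL) -> float:
    """Convert % of infected RBC to parasitised RBC per µL."""
    return percent_infected * rbc_per_ul / 100


def per_ul_to_percent(parasitised_per_ul: float,
                      rbc_per_ul: float = ASSUMED_RBC_PER_UL) -> float:
    """Convert parasitised RBC per µL to % of infected RBC."""
    return parasitised_per_ul * 100 / rbc_per_ul


print(percent_to_per_ul(5))     # 200000.0
print(per_ul_to_percent(2000))  # 0.05
```

Under the same assumption, a density of 2000/µL is only about 0.05% parasitaemia, which illustrates how wide the range of "lower" densities is.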

Malaria disease: fever plus plasmodia in blood plus other typical symptoms?

Researchers have struggled to find clinical signs and symptoms that are good predictors or excluders of clinical malaria. Unfortunately, the results have been frustrating. Some symptoms such as vomiting may increase the probability of malaria in children, while other symptoms like cough may decrease that probability, but no symptom combination can actually confirm or rule out the suspicion of malaria in a febrile child.[18]

Malaria-attributable fever: an epidemiological concept.

Researchers assessing the efficacy of malaria vaccines were confronted with the lack of a case definition of clinical malaria. Simple malaria infection is not a good indicator of vaccine efficacy: pragmatically, the interest lies less in how many infections a vaccine prevents than in how many disease episodes (and related deaths) vaccination can avoid. Therefore, in search of a proxy for clinical malaria, the epidemiologists involved in vaccine trials focused on fever, and attempted to estimate what proportion of fevers in a given population could be attributed to malaria.[16] The basis was the well-known epidemiological concept of the attributable risk (AR), or attributable fraction (AF), which estimates, in a longitudinal or a case-control study, how many cases of a given disease are attributable to one or more risk factors. The formulas are well known and can be found in any epidemiology textbook.[19] We briefly summarize how this is done starting from longitudinal data, that is, from two populations of infected and uninfected children, assessing the proportion of fevers occurring within a short time in each group.[20] If fp is the proportion of febrile children amongst parasitaemic children, and fp0 the proportion of febrile children amongst aparasitaemic children, then the AF (the proportion of fevers among parasitaemic children that is attributable to malaria) is:

AF=(fp - fp0)/fp

The following example (Table 1) is taken from a population study of children in the Gambia.[21] More parasitaemic than aparasitaemic children have fever (41/127 or 32% versus 33/280 or 12%). But among the 41 febrile children with malaria parasites in the blood, the fever is not caused by malaria in every case, as fever is also found in a (smaller) proportion of uninfected children. The proportion of fevers that are attributable to malaria is given by the AF.

fp = 41/127 ≈ 0.32 or 32%

fp0 = 33/280 ≈ 0.12 or 12%.

AF = (0.323 - 0.118)/0.323 ≈ 0.63.

In plain terms, this means that in 63% of children with fever and parasites the illness is presumed to be due to malaria, while in the other 37% the fever is probably caused by another disease (see Figure 1 for a visual representation).
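The same calculation can be reproduced from the Table 1 counts. This is a minimal sketch of the attributable fraction among the exposed (here, the parasitaemic children), using the formula AF = (fp - fp0)/fp given above; the function name is illustrative. Working from the raw counts rather than the rounded percentages avoids rounding drift:

```python
def attributable_fraction(febrile_exposed: int, total_exposed: int,
                          febrile_unexposed: int, total_unexposed: int) -> float:
    """AF among the exposed: (fp - fp0) / fp.

    fp  = proportion febrile among parasitaemic (exposed) children
    fp0 = proportion febrile among aparasitaemic (unexposed) children
    """
    fp = febrile_exposed / total_exposed
    fp0 = febrile_unexposed / total_unexposed
    if fp == 0:
        raise ValueError("no febrile exposed subjects: AF is undefined")
    return (fp - fp0) / fp


# Gambian data quoted in the text: 41/127 parasitaemic and 33/280
# aparasitaemic children were febrile.
af = attributable_fraction(41, 127, 33, 280)
print(f"AF = {af:.2f}")                                          # AF = 0.63
print(f"malaria-attributable fevers among the 41: {41 * af:.0f}")  # 26
```

Because fp and fp0 vary with season, age and parasite density, the same function would have to be applied separately within each stratum rather than once to pooled data.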

Table 1. Presence of fever according to parasitaemia in a population study.

Figure 1. Graphic representation of the attributable fraction (AF) of fever to malaria infection, based on the data in Table 1. The area of each square is proportional to the size of the corresponding group. Fevers attributable to malaria (in red) are only a proportion of the fevers in infected subjects; fevers likely to be due to another cause, some of which occur in plasmodia carriers, are shown in yellow.

In a given endemic area, only a proportion of fevers among infected subjects is attributable to malaria. This proportion increases with parasite density, and above a given cut-off virtually all fevers are attributable to malaria. The trouble is that this proportion is variable: it depends on several factors, including the intensity of transmission (usually influenced by season and often variable from year to year) and the age of the subjects. Moreover, the AF is an epidemiological concept that cannot be automatically translated into an individual diagnosis. In a rural area of Burkina Faso, we estimated that in the rainy season, among children > 5 years with fever and a P. falciparum parasite density between 400 and 4000/µL, about half of the cases were attributable to malaria.[22] If I see an individual patient with fever and a positive malaria film for P. falciparum, with a parasite density of, say, 2000/µL, all I can say is that my patient is about 50% likely to have an acute malaria attack, and 50% likely to be a carrier of malaria parasites with another cause of the current fever.

Malaria infection without disease: plasmodia in blood without fever?

Is asymptomatic malaria infection a harmless condition? Can we define infected subjects without fever as healthy carriers? The answer is no. Unfortunately, little research has been done on the pathological effects of long-term malaria infection, but strong epidemiological evidence shows that malaria infection produces pathological effects even in the absence of fever. Anemia is certainly more frequent and more severe in children with malaria parasites in the blood, and so is splenomegaly,[23] to such an extent that the so-called “spleen rate” is a marker of malaria prevalence almost as accurate as a laboratory-based survey.[24] Anemia and an enlarged spleen are the most common markers of “chronic malaria”, and these subjects cannot be defined as “healthy”. The most severe form of chronic malaria is hyper-reactive malarial splenomegaly (HMS), a neglected condition characterized by gross splenomegaly, pancytopenia and severely impaired cellular immunity.[25] Plasmodia are present in the blood, but at such a low density that they may be missed by both microscopy and RDTs. This condition is invariably fatal if not adequately treated, and is probably the tip of the iceberg of the pathological manifestations of chronic malaria infection. A striking, indirect epidemiological indication of the harmful effects of malaria infection is the spectacular reduction in child mortality observed in some of the first trials of impregnated bed nets:[26] the prevention of malaria-specific mortality explained only a fraction of this reduction, indicating that malaria infection predisposes to death from other causes. Therefore, malaria infection is likely to produce pathological effects even in the absence of fever, and when it is detected it should probably be treated.

Diagnosis of malaria infection and disease.

Microscopy is still the gold standard for the diagnosis of malaria infection. The techniques of slide preparation, staining and reading are well known and standardized, and so is the estimate of parasite density, an added value of microscopy that can easily be obtained from a thick film (Figure 2).[17] RDTs are now replacing microscopy at P.H.C. level almost everywhere in endemic countries. Many different tests are available on the market, and their accuracy is being systematically evaluated by the W.H.O. in partnership with other organizations.[27,28] Several RDTs, based on either HRP-2 or p-LDH, are virtually 100% sensitive at a comparatively low parasite density (200 parasites/µL) and highly specific for P. falciparum infection, some of them also for other plasmodia.

Other diagnostic methods do exist, such as the Quantitative Buffy Coat (QBC) and polymerase chain reaction (PCR), but they require adequate laboratory facilities and are not an option for routine use in endemic areas.

For practical purposes, RDTs are presently the main tool for the diagnosis of malaria in the field.

In immunochromatographic RDTs, malaria antigen binds to dye-conjugated monoclonal antibodies and is then captured by antibodies fixed on a nitrocellulose strip, causing a clearly visible line to appear. Most tests have a control line, which is the only line that appears in a negative test (Figure 3, a), while in a positive test a second line appears (Figure 3, b), usually within 15 minutes or less, making the reading straightforward and reproducible, unlike microscopy. This is therefore an ideal tool in the field.
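The reading rule for a two-line device can be written as a small decision function. This is a hypothetical sketch, not any manufacturer's procedure; it assumes the usual convention that a test whose control line fails to appear is invalid and must be repeated, which the text does not state explicitly:

```python
from enum import Enum


class RdtReading(Enum):
    NEGATIVE = "negative"
    POSITIVE = "positive"
    INVALID = "invalid"


def read_two_line_rdt(control_line: bool, test_line: bool) -> RdtReading:
    """Interpret a hypothetical two-line malaria RDT.

    control_line: the control line is visible
    test_line: the antigen (test) line is visible
    """
    # Assumption: without a control line the strip did not run properly,
    # so any test line is uninterpretable.
    if not control_line:
        return RdtReading.INVALID
    return RdtReading.POSITIVE if test_line else RdtReading.NEGATIVE


print(read_two_line_rdt(control_line=True, test_line=False).value)  # negative
```

A POSITIVE reading here means only that antigen was detected, that is, malaria infection; it does not by itself establish that the current fever is due to malaria.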

The systematic use of RDTs, now recommended by the WHO for both children and adults,[29] should limit the prescription of antimalarials to those who really need them, thus reducing the costs of malaria control programmes and preserving the efficacy of artemisinin-based drug combinations. Moreover, once malaria has been excluded as the cause of fever in RDT-negative subjects, health workers should consider alternative causes of fever, also bearing in mind that in many countries malaria incidence has sharply declined and only a small proportion of all fevers are due to malaria.[30-33]

The new WHO policy was preceded by a heated debate in the malaria community,[34,35] and was largely based on convincing results from countries where the decrease in malaria incidence had been dramatic, such as Tanzania.[36,37]

The extension of this policy to countries where this decline has not yet occurred is questionable, though.

Figure 2. Giemsa stained thick film with high parasite density of P. falciparum (Photograph by Maria Gobbo, CTD Negrar)

Figure 3. HRP-2-based RDT with positive (a) and negative (b) result (Photograph by Maria Gobbo, CTD Negrar)

Accuracy and cost-effectiveness of RDTs for the diagnosis of malaria infection and of malaria-attributable fever.

RDTs are an accurate tool for the diagnosis of malaria infection.[5-10,20,25,26] Their sensitivity is high enough to rule out malaria as the cause of fever in most instances, as they miss only very low parasite densities that are generally of no clinical significance. Nevertheless, in infants and young children the proportion of fevers attributable to malaria is very high even at the lowest parasite densities, which may be missed by RDTs.[20,38] Moreover, on rare occasions even a high or very high parasite density may be missed by an RDT, because of the so-called prozone effect[39] or for other reasons. In general, however, withholding malaria treatment in case of a negative RDT appears to be reasonably safe.[35] Specificity is a more complex matter. It has been assessed for malaria infection by many studies and generally found to be very high, meaning that when an RDT result is positive, plasmodia are in fact present in the blood. However, unlike traditional microscopy, RDTs do not provide any estimate of parasite density, and their specificity for malaria-attributable fever is highly variable, being very low in some epidemiological contexts.[20] In practical terms, this means that a patient with fever AND a positive RDT can have malaria, OR an alternative cause of fever. Before RDTs were introduced, clinical officers (most often nurses at the peripheral level in endemic countries) used diagnostic algorithms based on clinical symptoms and signs only, and often treated a febrile patient with both an antimalarial and an antibiotic if, in their judgement, both malaria and a bacterial infection were possible. The use of RDTs should limit this “double prescription”: those with a positive test receive antimalarials only, and those who test negative for malaria but have symptoms and signs suggesting a possible bacterial cause receive antibiotics only.
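How specificity for malaria-attributable fever depends on the epidemiological context can be made explicit with a deliberately idealised model. The sketch below assumes (simplifications not taken from the text) an RDT that detects every infection and never gives a false positive for infection, and that the AF defines which fevers are malaria-attributable. If q is the proportion of febrile patients who are parasitaemic and a the AF among them, malaria-attributable fevers make up q·a of all fevers and are all RDT-positive, while carriers with another cause of fever, q(1 - a) of all fevers, are RDT-positive too. Specificity for malaria-attributable fever then reduces to (1 - q)/(1 - q·a), and the positive predictive value to a. The parameter values in the example are hypothetical:

```python
def rdt_accuracy_for_maf(q: float, a: float) -> dict:
    """Idealised RDT accuracy for malaria-attributable fever (MAF).

    q: proportion of febrile patients who are parasitaemic (0 < q <= 1)
    a: attributable fraction among febrile parasitaemic patients (0 <= a <= 1)
    Assumes the RDT is 100% sensitive and 100% specific for *infection*.
    """
    if not (0 < q <= 1 and 0 <= a <= 1) or q * a == 1:
        raise ValueError("need 0 < q <= 1, 0 <= a <= 1 and q*a < 1")
    maf = q * a                         # fevers due to malaria: all RDT-positive
    non_maf = 1 - maf                   # fevers with another cause
    carriers_other_fever = q * (1 - a)  # RDT-positive, but fever not malarial
    specificity = (non_maf - carriers_other_fever) / non_maf
    ppv = maf / q                       # share of RDT-positives with MAF
    return {"sensitivity": 1.0, "specificity": specificity, "ppv": ppv}


# Hypothetical contexts (illustrative values only):
high = rdt_accuracy_for_maf(q=0.9, a=0.5)  # most febrile children parasitaemic
low = rdt_accuracy_for_maf(q=0.2, a=0.8)   # few carriers among febrile patients
print(f"{high['specificity']:.2f}")  # 0.18
print(f"{low['specificity']:.2f}")   # 0.95
```

In this model a positive RDT points to the true cause of fever only as often as the AF, and specificity collapses as q approaches 1, which is the situation of intense transmission.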

While the latter option is probably reasonably safe, the former is not. If a child with fever due to, say, pneumonia is tested and the RDT result is positive, the nurse may be led to treat for malaria only, whereas on clinical grounds alone he or she would probably have given both treatments.

As far as cost-effectiveness is concerned, most studies have concluded that an RDT-based malaria management policy is cost-effective compared with presumptive management.[40-47] However, a major flaw of most of these studies was the implicit assumption that health workers would fully adhere to a negative test result, whereas in some countries at least it was clearly shown that a high proportion of febrile patients testing negative were nevertheless treated for malaria.[48,49] Other researchers found more encouraging results, though in contexts where malaria incidence had dropped.[34,50] Where and when malaria incidence is still high, as in most of West Africa during the rainy season, an RDT-based policy was shown to be less cost-effective than the presumptive approach, and potentially harmful, especially because a positive result that is false in clinical terms may lead to antibiotics being withheld from patients with non-malarial febrile illnesses.[51]

A further limitation to the cost-effectiveness of RDTs is the general tendency to use them on afebrile patients as well,[48,52] which wastes resources. Moreover, although we have seen that malaria infection without fever is not necessarily harmless, extending the use of antimalarials to afebrile patients would increase the risk of selecting drug-resistant strains, which is precisely what the test-based policy is meant to limit.

An evidence-based approach to malaria diagnosis and management.

Malaria management policies cannot be the same everywhere, regardless of the epidemiological context. Where malaria incidence is (or has become) low or very low, RDTs clearly have a key role in limiting unnecessary antimalarial prescriptions. Withholding malaria treatment from RDT-negative patients in such contexts is most probably safe.[34,35] Health workers should, however, be properly trained in how to deal with a positive result: if the clinical picture suggests a possible bacterial cause of fever, double treatment (antimalarial plus antibiotic) is justified, as a positive RDT may be a false positive in clinical terms, detecting malaria infection in a patient who actually has another acute disease. Clinical guidelines, including the Integrated Management of Childhood Illness (IMCI), should introduce RDTs at the right step: the treatment-decision node for NMFI should come before the RDT node in clinical algorithms, and the RDT result should guide malaria treatment but not be used to exclude other potential causes of fever.[49]
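The recommended ordering of nodes can be contrasted with the ordering the text cautions against in a short sketch. The function names, the boolean inputs and the reduction of each clinical assessment to a single yes/no are hypothetical simplifications, not part of IMCI or any published algorithm:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Treatment:
    antibiotic: bool
    antimalarial: bool


def rdt_node_first(bacterial_signs: bool, rdt_positive: bool) -> Treatment:
    """Ordering the text warns against: a positive RDT closes the work-up."""
    if rdt_positive:
        return Treatment(antibiotic=False, antimalarial=True)
    return Treatment(antibiotic=bacterial_signs, antimalarial=False)


def nmfi_node_first(bacterial_signs: bool, rdt_positive: bool) -> Treatment:
    """Recommended ordering: decide on NMFI treatment before the RDT node.

    The antibiotic decision rests on clinical signs alone and is not
    revisited after the test; the RDT only guides antimalarial treatment.
    """
    antibiotic = bacterial_signs
    antimalarial = rdt_positive
    return Treatment(antibiotic=antibiotic, antimalarial=antimalarial)


# A child with signs of pneumonia and a positive RDT:
print(rdt_node_first(True, True))   # Treatment(antibiotic=False, antimalarial=True)
print(nmfi_node_first(True, True))  # Treatment(antibiotic=True, antimalarial=True)
```

The two orderings differ only for RDT-positive patients with signs of bacterial disease, exactly the case of the child with pneumonia discussed above. This applies where RDTs are used at all; in high-transmission settings the text recommends presumptive management instead.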

In countries targeted for malaria elimination,[53] RDTs and, potentially, new and even more sensitive diagnostic tools may also be useful for identifying pockets of infected people, regardless of the presence of fever, and treating them, to the benefit both of the individuals and of the community.[54]

In contrast, in countries, or areas within countries, where malaria is still hyperendemic or holoendemic, the presumptive clinical approach should be maintained, at least for children, and RDTs should not be used. For example, a recent study in Burkina Faso during the rainy season found that almost 90% of febrile children had a positive RDT.[20] The device tested was the Paracheck® Device, which is not one of the most sensitive RDTs according to the W.H.O. assessment.[25,26] With a more sensitive test, the percentage of positive results would probably have approached 100%, making the test useless as a decision tool. Clearly, in such a situation using the test would only add its cost to that of the treatment, which would be given to febrile children anyway.

Diagnosing imported malaria in non-endemic countries.

What might be a correct approach to suspected imported malaria in a non-endemic country? In this setting, except for patients who have recently immigrated from holoendemic countries, malaria infection equals malaria disease. Here, virtually unlimited diagnostic and therapeutic means are the rule. Comparison between different diagnostic tools is difficult: if microscopy remains the gold standard (reference test), the judgement will depend on how many fields have been examined. Currently, about 100 high-magnification fields is the standard, but in some cases a diagnosis is made only after carefully scrutinizing a thick film for one or two hours, to find, finally, a single parasite! This happens especially when P. ovale is involved, or when treatment, even a non-specific one (e.g. an antibiotic with antimalarial activity such as cotrimoxazole), has already been started. PCR is highly sensitive, but will probably not outperform this lengthy microscopic search. A multicentre diagnostic study carried out by the GISPI network in Italy confirmed that expert microscopy remains the mainstay of the diagnosis of imported malaria, particularly in mixed or non-falciparum infections, where it still outperforms alternative methods, including PCR.[55]

However, advanced diagnostic tools and expertise are not necessarily available in all care centres. In peripheral centres PCR is not available, and laboratory technicians lack expertise in microscopy. Moreover, malaria treatment should be started as soon as possible: diagnosis cannot wait until the next morning. As a consequence, RDTs have a substantial role in imported malaria: they are easy to perform, recent brands distinguish between falciparum and non-falciparum infection, and their sensitivity is high enough to exclude a clinically important, imminently life-threatening falciparum infection. A further advantage is that recent treatment does not necessarily impair RDT sensitivity. National quality control of RDT accuracy, against a thick film taken at the same time, is imperative, since there are many caveats to their correct use and interpretation.

Conclusions and research needs.

In an expert meeting at the European Commission DG Research in 2010,[56] experts were asked what the most relevant research needs on malaria diagnostics were. The basic conclusion was that we already have good tools for the diagnosis of malaria infection. However, in the context of malaria elimination programmes, research should focus on developing even more sensitive tools, able also to detect asymptomatic carriers (of gametocytes and not only of asexual forms), thus helping to detect pockets of infected individuals who can be successfully treated.[57] For clinical management, however, existing tools are already sensitive enough, as they detect the vast majority of malaria-attributable fevers. The problem is their lack of specificity for clinical malaria, especially in high transmission settings. An ideal diagnostic tool would detect malaria infection and also identify biomarkers of clinically significant malaria (capable of distinguishing disease from simple infection). Potential biomarkers have already been identified. In addition, an improved malaria RDT would give a semi-quantitative assessment of parasite density. Alternatively, research should focus on incorporating markers of more than one disease in the same device, for example by adding a biomarker of bacterial disease to a malaria RDT.

One may wonder, though, whether excessive reliance on new technology will lead to the definitive abandonment of basic, microscopy-based laboratories in the field, which could still be an invaluable tool not only for the diagnosis of malaria but also of TB, meningitis and other diseases, provided that adequate training, supervision and quality control are ensured.

References

- W.H.O. 2005. Handbook IMCI. Integrated Management of Childhood Illness. http://books.google.it/books?id=4Zhk4Y6UshcC&pg=PA56&hl=it&source=gbs_toc_r&cad=4#v=onepage&q&f=false, accessed 12th December, 2011

- W.H.O. 2006. WHO guidelines for treatment of malaria. Geneva: WHO; 2006. http://helid.digicollection.org/pdf/s13418e/s13418e.pdf, accessed 12th December, 2011

- Shiff CJ, Premji Z, Minjas JN. The rapid manual ParaSight-F test. A new diagnostic tool for Plasmodium falciparum infection. Trans R Soc Trop Med Hyg. 1993;87:646–648 http://dx.doi.org/10.1016/0035-9203(93)90273-S

- Bartoloni A, Bartoloni A, Sabatinelli G, Benucci M (1998) Performance of two rapid tests for Plasmodium falciparum malaria in patients with rheumatoid factors. N Engl J Med 338, 1075. http://dx.doi.org/10.1056/NEJM199804093381518 PMid:9537883

- Guthmann JP, Ruiz A, Priotto G et al. (2002) Validity, reliability and ease of use in the field of five rapid tests for the diagnosis of Plasmodium falciparum malaria in Uganda. Trans R Soc Trop Med Hyg 96, 254-257. http://dx.doi.org/10.1016/S0035-9203(02)90091-X

- Singh N & Saxena A (2005) Usefulness of a rapid on-site Plasmodium falciparum diagnosis (Paracheck PF) in forest migrants and among the indigenous population at the site of their occupational activities in central India. Am J Trop Med Hyg 72, 26-29. PMid:15728862

- Singh N, Saxena A, Awadhia SB, Shrivastava R, & Singh MP (2005) Evaluation of a rapid diagnostic test for assessing the burden of malaria at delivery in India. Am J Trop Med Hyg 73, 855-858. PMid:16282293

- van den Broek I, Hill O, Gordillo F et al. (2006) Evaluation of three rapid tests for diagnosis of P. falciparum and P. vivax malaria in Colombia. Am J Trop Med Hyg 75, 1209-1215. PMid:17172395

- Swarthout TD, Counihan H, Senga RK, & van den Broek I (2007) Paracheck-Pf accuracy and recently treated Plasmodium falciparum infections: is there a risk of over-diagnosis? Malar J 6, 58. http://dx.doi.org/10.1186/1475-2875-6-58 PMid:17506881 PMCid:1890550

- Murray CK, Gasser RA Jr, Magill AJ, & Miller RS (2008) Update on rapid diagnostic testing for malaria. Clin Microbiol Rev 21, 97-110. http://dx.doi.org/10.1128/CMR.00035-07 PMid:18202438 PMCid:2223842

- Krotoski WA. The hypnozoite and malarial relapse. Prog Clin Parasitol. (1989); 1:1-19 PMid:2491691

- Miller LH, Baruch D, Marsh K, Doumbo OK. The pathogenic basis of malaria. Nature February 2002;415(7):673-679. http://dx.doi.org/10.1038/415673a PMid:11832955

- McGregor IA. The development and maintenance of immunity to malaria in highly endemic areas. Clinical Tropical Medicine and Communicable Diseases. 1986;1:29–53

- Ogutu B, Tiono AB, Makanga M, Premji Z, Gbadoe AD, Ubben D, Marrast AC, Gaye O: Treatment of asymptomatic carriers with artemether-lumefantrine: an opportunity to reduce the burden of malaria? Malar J 2010, 9:30. http://dx.doi.org/10.1186/1475-2875-9-30 PMid:20096111 PMCid:2824802

- Baliraine FN, Afrane YA, Amenya DA, Bonizzoni M, Menge DM, Zhou G, Zhong D, Vardo-Zalik AM, Githeko AK, Yan G: High prevalence of asymptomatic Plasmodium falciparum infections in a highland area of western Kenya: a cohort study. J Infect Dis 2009, 200:66-74. http://dx.doi.org/10.1086/599317 PMid:19476434 PMCid:2689925

- Schellenberg JR, Smith T, Alonso PL, & Hayes RJ (1994) What is clinical malaria? Finding case definitions for field research in highly endemic areas. Parasitol Today 10, 439-442. http://dx.doi.org/10.1016/0169-4758(94)90179-1

- Trape JF. Rapid evaluation of malaria parasite density and standardization of thick smear examination for epidemiological investigations. Transactions of the Royal Society of Tropical Medicine and Hygiene. 1985;79:181–1 http://dx.doi.org/10.1016/0035-9203(85)90329-3

- Luxemburger C, Nosten F, Kyle DE, Kiricharoen L, Chongsuphajaisiddhi T, White NJ. Clinical features cannot predict a diagnosis of malaria or differentiate the infecting species in children living in an area of low transmission. Trans R Soc Trop Med Hyg. 1998 Jan-Feb;92(1):45-9. http://dx.doi.org/10.1016/S0035-9203(98)90950-6

- Rothman K; Greenland S (1998). Modern Epidemiology, 2nd Edition. Lippincott Williams & Wilkins.

- Van den Ende J, Lynen L. Diagnosis of malaria disease. In: Carosi G, Castelli F, editors. Handbook of malaria infection in the tropics. Bologna: Associazione Italiana 'Amici di R. Follereau' (AIFO); 1997. p. 137-150.

- Alonso PL, Lindsay SW, Armstrong Schellenberg JRM, Keita K, Gomez P, Shenton FC, Hill AG, David PH, Fegan G, Cham K, Greenwood BM: A malaria control trial using insecticide-treated bed nets and targeted chemoprophylaxis in a rural area of the Gambia, West Africa. 6. The impact of interventions on mortality and morbidity from malaria. Trans R Soc Trop Med Hyg 1993, 87(Supplement 2):37-44 http://dx.doi.org/10.1016/0035-9203(93)90174-O

- Bisoffi Z, Sirima SB, Menten J, Pattaro C, Angheben A, Gobbi F, Tinto H, Lodesani C, Neya B, Gobbo M, Van den Ende J. Accuracy of a rapid diagnostic test on the diagnosis of malaria infection and of malaria-attributable fever during low and high transmission season in Burkina Faso. Malar J. 2010; 9:192 http://dx.doi.org/10.1186/1475-2875-9-192 PMid:20609211 PMCid:2914059

- Stauffer W, Fischer FW. Diagnosis and Treatment of Malaria in Children. Clin Infect Dis. (2003) 37 (10): 1340-1348. http://dx.doi.org/10.1086/379074 PMid:14583868

- Chaves LF, Taleo G, Kalkoa M, Kaneko A. Spleen rates in children: an old and new surveillance tool for malaria elimination initiatives in island settings. Trans R Soc Trop Med Hyg. 2011 http://dx.doi.org/10.1016/j.trstmh.2011.01.001 PMid:21367441

- Crane GG. Hyperreactive malarious splenomegaly (tropical splenomegaly syndrome). Parasitol Today. 1986;2(1):4-9. http://dx.doi.org/10.1016/0169-4758(86)90067-0

- D'Alessandro U, Olaleye BO, McGuire W, Langerock P, Bennett S, Aikins MK, Thomson MC, Cham MK, Cham BA, Greenwood BM. Mortality and morbidity from malaria in Gambian children after introduction of an impregnated bednet programme. Lancet. 1995 Feb 25;345(8948):479-483. http://dx.doi.org/10.1016/S0140-6736(95)90582-0

- WHO. Malaria Rapid Diagnostic Test Performance round 1. Results of WHO product testing of malaria RDTs (2009). Geneva: WHO; 2010.

- WHO. Malaria Rapid Diagnostic Test Performance round 2. Results of WHO product testing of malaria RDTs (2009). Geneva: WHO; 2010.

- WHO. Guidelines for the treatment of malaria, second edition. Geneva: WHO; 2010. http://www.who.int/malaria/publications/atoz/9789241547925/en/index.html (accessed 12 December 2011).

- Jaenisch T, Sullivan DJ, Dutta A, Deb S, Ramsan M, Othman MK, Gaczkowski R, Tielsch J, Sazawal S. Malaria incidence and prevalence on Pemba Island before the onset of the successful control intervention on the Zanzibar archipelago. Malar J. 2010;9:32. http://dx.doi.org/10.1186/1475-2875-9-32 PMid:20100352 PMCid:2835718

- Mmbando BP, Vestergaard LS, Kitua AY, Lemnge MM, Theander TG, Lusingu JP. A progressive declining in the burden of malaria in north-eastern Tanzania. Malar J. 2010;9:216. http://dx.doi.org/10.1186/1475-2875-9-216 PMid:20650014 PMCid:2920289

- O'Meara WP, Bejon P, Mwangi TW, Okiro EA, Peshu N, Snow RW, Marsh K. Effect of a fall in malaria transmission on morbidity and mortality in Kilifi, Kenya. Lancet. 2008;372:1555-1562. http://dx.doi.org/10.1016/S0140-6736(08)61655-4

- Chizema-Kawesha E, Miller JM, Steketee RW, Mukonka VM, Mukuka C, Mohamed AD, Miti SK, Campbell CC. Scaling up malaria control in Zambia: progress and impact 2005-2008. Am J Trop Med Hyg. 2010;83:480-488. http://dx.doi.org/10.4269/ajtmh.2010.10-0035 PMid:20810807 PMCid:2929038

- D'Acremont V, Lengeler C, Mshinda H, Mtasiwa D, Tanner M, Genton B. Time to move from presumptive malaria treatment to laboratory-confirmed diagnosis and treatment in African children with fever. PLoS Med. 2009;6(1):e252. http://dx.doi.org/10.1371/journal.pmed.0050252 PMid:19127974 PMCid:2613421

- English M, Reyburn H, Goodman C, Snow RW. Abandoning presumptive antimalarial treatment for febrile children aged less than five years--a case of running before we can walk? PLoS Med. 2009;6(1):e1000015. http://dx.doi.org/10.1371/journal.pmed.1000015 PMid:19127977 PMCid:2613424

- Msellem MI, Martensson A, Rotllant G, Bhattarai A, Stromberg J, Kahigwa E, et al. Influence of rapid malaria diagnostic tests on treatment and health outcome in fever patients, Zanzibar: a crossover validation study. PLoS Med. 2009;6(4):e1000070. http://dx.doi.org/10.1371/journal.pmed.1000070 PMid:19399156 PMCid:2667629

- D'Acremont V, Aggrey M, Swai N, Tyllia R, Kahama-Maro J, Lengeler C, Genton B. Withholding antimalarials in febrile children with a negative Rapid Diagnostic Test is safe in moderately and highly endemic areas of Tanzania: a prospective longitudinal study. Clin Infect Dis. 2010;51:506-511. PMid:20642354

- McGuinness D, Koram K, Bennett S, Wagner G, Nkrumah F, Riley E. Clinical case definitions for malaria: clinical malaria associated with very low parasite densities in African infants. Trans R Soc Trop Med Hyg. 1998;92(5):527-531. http://dx.doi.org/10.1016/S0035-9203(98)90902-6

- Gillet P, Mori M, Van Esbroeck M, Van den Ende J, Jacobs J. Assessment of the prozone effect in malaria rapid diagnostic tests. Malar J. 2009;8:271. http://dx.doi.org/10.1186/1475-2875-8-271 PMid:19948018 PMCid:2789093

- Lubell Y, Reyburn H, Mbakilwa H, Mwangi R, Chonya S, Whitty CJ, Mills A. The impact of response to the results of diagnostic tests for malaria: cost-benefit analysis. BMJ. 2008;336:202-205. http://dx.doi.org/10.1136/bmj.39395.696065.47 PMid:18199700 PMCid:2213875

- Bualombai P, Prajakwong S, Aussawatheerakul N, Congpoung K, Sudathip S, Thimasarn K, Sirichaisinthop J, Indaratna K, Kidson C, Srisuphanand M. Determining cost-effectiveness and cost component of three malaria diagnostic models being used in remote non-microscope areas. Southeast Asian J Trop Med Public Health. 2003;34:322-333. PMid:12971557

- Rolland E, Checchi F, Pinoges L, Balkan S, Guthmann JP, Guerin PJ. Operational response to malaria epidemics: are rapid diagnostic tests cost-effective? Trop Med Int Health. 2006;11:398-408. http://dx.doi.org/10.1111/j.1365-3156.2006.01580.x PMid:16553923

- Shillcutt S, Morel C, Goodman C, Coleman P, Bell D, Whitty CJ, Mills A. Cost-effectiveness of malaria diagnostic methods in sub-Saharan Africa in an era of combination therapy. Bull World Health Organ. 2008;86:101-110. http://dx.doi.org/10.2471/BLT.07.042259 PMid:18297164 PMCid:2647374

- Yukich J, D'Acremont V, Kahama J, Swai N, Lengeler C. Cost savings with rapid diagnostic tests for malaria in low-transmission areas: evidence from Dar es Salaam, Tanzania. Am J Trop Med Hyg. 2010;83:61-68. http://dx.doi.org/10.4269/ajtmh.2010.09-0632 PMid:20595479 PMCid:2912577

- Chanda P, Castillo-Riquelme M, Masiye F. Cost-effectiveness analysis of the available strategies for diagnosing malaria in outpatient clinics in Zambia. Cost Eff Resour Alloc. 2009;7:5. http://dx.doi.org/10.1186/1478-7547-7-5 PMid:19356225 PMCid:2676244

- Uzochukwu BS, Obikeze EN, Onwujekwe OE, Onoka CA, Griffiths UK. Cost-effectiveness analysis of rapid diagnostic test, microscopy and syndromic approach in the diagnosis of malaria in Nigeria: implications for scaling-up deployment of ACT. Malar J. 2009;8:265. http://dx.doi.org/10.1186/1475-2875-8-265 PMid:19930666 PMCid:2787522

- Mosha JF, Conteh L, Tediosi F, Gesase S, Bruce J, Chandramohan D, Gosling R. Cost implications of improving malaria diagnosis: findings from north-eastern Tanzania. PLoS One. 2010;5:e8707. http://dx.doi.org/10.1371/journal.pone.0008707 PMid:20090933 PMCid:2806838

- Reyburn H, Mbakilwa H, Mwangi R, et al. Rapid diagnostic tests compared with malaria microscopy for guiding outpatient treatment of febrile illness in Tanzania: randomised trial. BMJ. 2007;334:403.

- Bisoffi Z, Sirima SB, Angheben A, Lodesani C, Gobbi F, Tinto H, Van den Ende J. Rapid malaria diagnostic tests vs. clinical management of malaria in rural Burkina Faso: safety and effect on clinical decisions. A randomized trial. Trop Med Int Health. 2009;14(5):491-8.

- D'Acremont V, Malila A, Swai N, Tillya R, Kahama-Maro J, Lengeler C, Genton B. Withholding antimalarials in febrile children who have a negative result for a rapid diagnostic test. Clin Infect Dis. 2010;51:506-511. http://dx.doi.org/10.1086/655688 PMid:20642354

- Bisoffi Z, Sirima SB, Meheus P, Lodesani C, Gobbi F, Angheben A, Tinto H, Neya B, Van den Ende K, Romeo A, Van den Ende J. Strict adherence to malaria rapid test results might lead to a neglect of other dangerous diseases: a cost benefit analysis from Burkina Faso. Malar J. 2011;10:226. http://dx.doi.org/10.1186/1475-2875-10-226 PMid:21816087 PMCid:3199908

- Ansah EK, Narh-Bana S, Epokor M, Akanpigbiam S, Quartey AA, Gyapong J, Whitty CJ. Rapid testing for malaria in settings where microscopy is available and peripheral clinics where only presumptive treatment is available: a randomised controlled trial in Ghana. BMJ. 2010;340:c930. http://dx.doi.org/10.1136/bmj.c930 PMid:20207689 PMCid:2833239

- Feachem RG, Phillips AA, Targett GA, Snow RW. Call to action: priorities for malaria elimination. Lancet. 2010;376(9752):1517-21.

- McMorrow ML, Aidoo M, Kachur SP. Malaria rapid diagnostic tests in elimination settings--can they find the last parasite? Clin Microbiol Infect. 2011 Nov;17(11):1624-31. http://dx.doi.org/10.1111/j.1469-0691.2011.03639.x PMid:21910780

- Gatti S, Gramegna M, Bisoffi Z, Raglio A, Gulletta M, Klersy C, Bruno A, Maserati R, Madama S, Scaglia M; Gispi Study Group. A comparison of three diagnostic techniques for malaria: a rapid diagnostic test (NOW Malaria), PCR and microscopy. Ann Trop Med Parasitol. 2007;101(3):195-204. http://dx.doi.org/10.1179/136485907X156997 PMid:17362594

- http://ec.europa.eu/research/health/infectious-diseases/poverty-diseases/event-01-presentations_en.html

- Stresman GH, Kamanga A, Moono P, Hamapumbu H, Mharakurwa S, Kobayashi T, Moss WJ, Shiff C. A method of active case detection to target reservoirs of asymptomatic malaria and gametocyte carriers in a rural area in Southern Province, Zambia. Malar J. 2010 Oct 4;9:265. PMid:20920328 PMCid:2959066

- Kassa FA, Shio MT, Bellemare MJ, Faye B, Ndao M, Olivier M. New inflammation-related biomarkers during malaria infection. PLoS One. 2011;6(10):e26495.

- Díez-Padrisa N, Bassat Q, Roca A. Serum biomarkers for the diagnosis of malaria, bacterial and viral infections in children living in malaria-endemic areas. Drugs Today (Barc). 2011 Jan;47(1):63-75. Review. http://dx.doi.org/10.1358/dot.2011.47.1.1534821 PMid:21373650